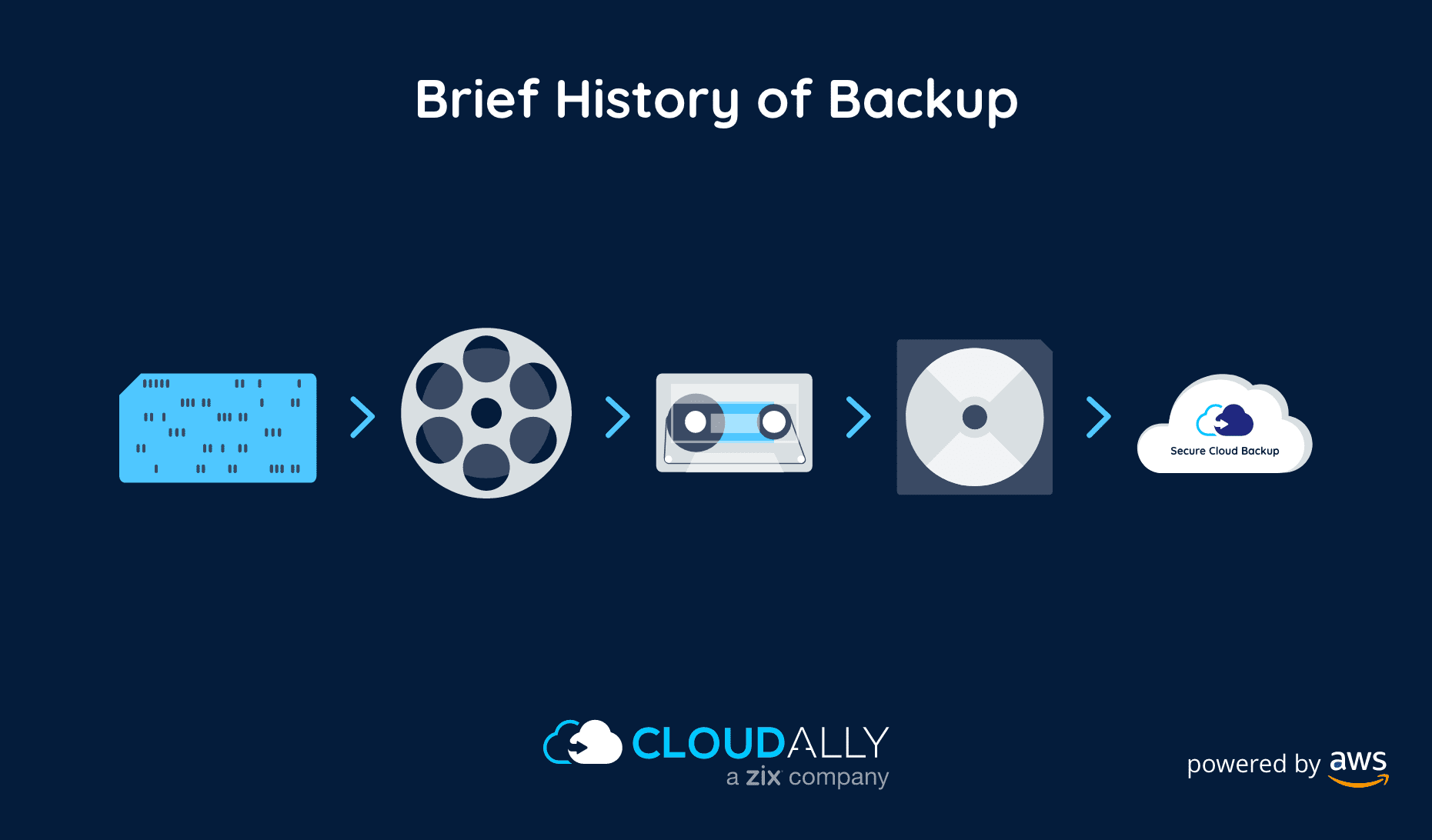

For those of us who worked with computers before the advent of the personal computer, IBM’s latest announcement of a new tape cartridge with a capacity of 152TB of data leaves us with a feeling of amazement and nostalgia. For me, the nostalgia is all the more personal as I look back at my journey in the backup industry. At CloudAlly, we launched SaaS backup for Google Apps (now Google Workspace) and Salesforce back in 2011. This year, as we complete a decade, I thought it fitting to trace the evolution of backup. Here is the history of data backup, from tape to the cloud.

History of Data Backup: Evolution of Devices

Punch Cards For Basic, Limited Processing

The earliest “large” storage medium was probably the punch card, which could hold your program or your calculation results. Punch card technology, which dates back to the Industrial Revolution, could collect and store large amounts of data. IBM has its roots in the punch card and expanded its use from data entry to processing and storage.

Punch cards were closely followed by paper tapes. Unfortunately, these media types were not particularly reliable and could easily be damaged.

Magnetic Tape Storage To Support Complex Data Processing

In the early days of computing, computers were used more for numerical calculations than for data processing, so storage requirements were limited to programs and output results. Once organizations started using computers for data processing, the need for backup storage became obvious. Organizations realized that data kept the business running, so it had to be protected, not so much from hackers as from internal loss or destruction. For example, the computer site might burn down, or a software update might corrupt the data. In such catastrophic situations, you want to be able to restore the data from a known-good copy.

Although most of the attention went to the rapid increases in hard disk speed and capacity, backup storage such as magnetic tape was also improving by leaps and bounds. When IBM released the IBM 701 in 1952, it came with a 925-pound floor-standing tape unit that could read and write tapes with fast start and stop times. Each tape held what then seemed a monumental 2.3MB of data. Magnetic tape became so popular that if you wanted to show a computer at work, you showed the tape drive whirring away. It became the de facto medium for long-term backup storage.

This gave rise to an industry of off-site magnetic tape storage, which mitigated the risk of keeping backup tapes on-site, where they could be damaged along with the primary systems.

Floppy Disks, CDs, DVDs: The New Age of Exceptionally Portable Backup

The 1970s saw the advent of the floppy disk, followed later by writable CDs and DVDs, and flash drives. These media overtook magnetic tape for many uses by being both portable and cost-effective, and data could easily be stored and moved between devices. However, their capacity was limited, and backup disks and drives stored at the same location as the business risked being damaged along with it.

The Return of the Hard Disk Drive, SD Cards, and Flash Drives

Shortly after magnetic tape, in 1956, IBM developed the first hard disk drive (HDD). Early HDDs were extremely expensive, costing thousands of dollars, and could store only a few megabytes of data. Even though HDDs were later instrumental in the development of the personal computer, they were not a viable backup option in the 1950s.

It took until the 1990s for HDDs to become popular as a backup medium, driven by falling costs and growing capacity. HDDs were not only faster than tape but could also be set up to run automated backups.

Backup to Support Distributed Computing

The 1980s and 1990s saw the rise of distributed computing. Instead of centralizing all computing work on a large mainframe in a corporate data center, organizations decentralized it and moved it closer to where the work and data were. Branch offices and subsidiaries ran their own data processing on minicomputers, which were significantly cheaper than mainframes and could be installed in ordinary offices. This, in turn, called for a cheaper backup solution.

Reel-to-reel magnetic tape drives had become more powerful but were still expensive and required special operating conditions. The technology that evolved to fill the gap was the magnetic tape cartridge: smaller, cheaper, and faster, with capacities eventually reaching 100GB of data. This solved the size and cost problems for minicomputers, but the question of where to store the backups remained.

This in turn fueled the growth of companies that picked up the magnetic media used for daily backups and stored it in well-protected, environmentally controlled locations.

History of Data Backup: Location of Backup – On-site, Off-site

If the data was not too volatile, a daily backup meant that up to one day’s work might be lost if a disaster occurred. However, in some situations you might want to recover data from further back than the previous day. The common solution was the grandfather-father-son backup rotation, which kept at least three generations of backups: yesterday’s daily backup (the son), last week’s backup (the father), and last month’s backup (the grandfather). These were kept either on-site or off-site.
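To make the rotation concrete, here is a minimal Python sketch of how a grandfather-father-son scheme might decide which backups to keep. The function name `gfs_retain`, the retention counts (7 daily, 4 weekly, 3 monthly), and the rule that the newest backup in each ISO week or calendar month serves as that period’s father or grandfather are illustrative assumptions, not taken from any particular backup product.

```python
from datetime import date, timedelta


def gfs_retain(backups, daily=7, weekly=4, monthly=3):
    """Return the set of backup dates a grandfather-father-son rotation keeps.

    Illustrative assumptions (not taken from any specific product):
    - sons: the `daily` most recent backups
    - fathers: the newest backup in each of the `weekly` most recent ISO weeks
    - grandfathers: the newest backup in each of the `monthly` most recent months
    """
    ordered = sorted(set(backups), reverse=True)  # newest first
    keep = set(ordered[:daily])  # sons

    def newest_per_period(period_of, count):
        newest = {}
        for d in ordered:  # newest first, so the first hit in a period is its newest backup
            period = period_of(d)
            if period not in newest:
                if len(newest) == count:
                    break  # already found `count` periods; older ones are not kept
                newest[period] = d
        return set(newest.values())

    keep |= newest_per_period(lambda d: d.isocalendar()[:2], weekly)  # fathers: (ISO year, ISO week)
    keep |= newest_per_period(lambda d: (d.year, d.month), monthly)  # grandfathers
    return keep


# Example: 90 consecutive nightly backups ending on a hypothetical date.
end = date(2021, 3, 31)
history = [end - timedelta(days=i) for i in range(90)]
kept = gfs_retain(history)
print(len(history), "backups ->", len(kept), "kept")  # prints: 90 backups -> 11 kept
```

In this example the sketch keeps the last seven nightly backups, two further weekly backups (March 14 and 21), and the month-end backups for January and February. Everything else could be recycled, which in the tape era meant reusing the tape.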

On-site backups had to be kept somewhere well protected from both theft and fire. For off-site backups, the organization needed to ensure that the media were picked up regularly, stored securely, and could be returned easily if a restore was required.

History of Data Backup: Types of Backup – Full, Daily, Weekly, and Incremental Backup

As tape capacity increased, so did the amount of data to be backed up. Backups to tape were normally run overnight, as that was when the systems could be taken offline and the data backed up. However, as data grew exponentially, every night became a race to see whether the backup would complete before the systems had to go back online.

Software provided the answer to ever-longer full backups. In most situations, only a small percentage of the data changed each day, so why back up everything every night? The simplest solution was to perform a full backup once a week, followed by daily backups that copied only the data that had changed, either since the previous backup (an incremental backup) or since the weekly full backup (a differential backup). This significantly reduced backup times, at the cost of a longer and more complex restore: you needed to restore the complete weekly backup and then the relevant daily ones.
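The trade-off between backup time and restore effort can be sketched in a few lines of Python. This is a toy model under stated assumptions: the dataset is a dictionary mapping file names to contents, a deleted file is recorded as `None`, and the helper names (`full_backup`, `changes_since`, `restore`) are hypothetical. The same `changes_since` helper produces an incremental backup when compared against the previous day, and a differential backup when compared against the weekly full backup.

```python
def full_backup(data):
    """Weekly full backup: copy every file."""
    return dict(data)


def changes_since(data, baseline):
    """Copy only files that changed or appeared since `baseline`.

    Against the previous day this is an incremental backup; against the
    weekly full backup it is a differential. Deletions are stored as None.
    """
    changes = {name: body for name, body in data.items() if baseline.get(name) != body}
    changes.update({name: None for name in baseline if name not in data})
    return changes


def restore(full, daily_backups):
    """Start from the full backup, then replay each daily backup in order."""
    state = dict(full)
    for backup in daily_backups:
        for name, body in backup.items():
            if body is None:
                state.pop(name, None)  # file was deleted
            else:
                state[name] = body
    return state


# Hypothetical week: file name -> version
week = [
    {"a.txt": "v1", "b.txt": "v1", "c.txt": "v1"},  # Sunday: full backup
    {"a.txt": "v2", "b.txt": "v1", "c.txt": "v1"},  # Monday: a.txt edited
    {"a.txt": "v2", "b.txt": "v1"},                  # Tuesday: c.txt deleted
    {"a.txt": "v2", "b.txt": "v2", "d.txt": "v1"},  # Wednesday: b.txt edited, d.txt added
]
full = full_backup(week[0])
incrementals = [changes_since(week[i], week[i - 1]) for i in range(1, len(week))]
differentials = [changes_since(week[i], week[0]) for i in range(1, len(week))]

# Restoring Wednesday: full + all three incrementals, or full + Wednesday's differential.
assert restore(full, incrementals) == week[3]
assert restore(full, differentials[-1:]) == week[3]
print([len(b) for b in incrementals], [len(b) for b in differentials])  # prints: [1, 1, 2] [1, 2, 4]
```

The printed sizes show the trade-off: each incremental stays small, while the differential grows through the week, yet restoring Wednesday needs only the full backup plus one differential instead of the full backup plus three incrementals.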

Data Backup Today: The Advent of SaaS Backup

The 2000s brought cloud computing, with its effortless scalability and powerful collaboration applications. Companies moved en masse to adopt cloud platforms and harness their power. Cloud backup soon followed, offering organizations a simpler and cheaper usage model: you pay only for the computing power and storage you actually use, so there is no upfront investment, and capacity scales with your needs.

With the rise of public cloud usage, business-critical data moved to cloud platforms like Microsoft 365 (formerly Office 365), Google Workspace (formerly G Suite), and Salesforce. However, the incredible “availability” of data in the cloud led to the misconception that SaaS data was immune to loss. Surely there had to be a copy somewhere in the cloud, right? While SaaS platforms are stringently secure, you control your data and are responsible for it. If someone mistakenly deletes it, maliciously corrupts it, or a sync error wipes it out, it can be permanently lost. The internet age also brought malware, phishing scams, and ransomware, adding to the risk of SaaS data loss.

CloudAlly traces its origin to our founder Avinoam Katz’s realization that SaaS data needed to be backed up. And where better to back it up than in the cloud itself? With that, we launched the first SaaS backup for Google Apps (now Google Workspace) and Salesforce in 2011. In the past decade, CloudAlly has developed a suite of best-of-breed cloud-to-cloud data backup solutions for Microsoft 365, Google Workspace, Salesforce, Box, and Dropbox.

Concluding Thoughts

The increase in storage capacity has been exceptional: from 2.3MB on a single tape to more than 150TB on a single cartridge within a lifetime, and that is without counting the capacity of large modern tape libraries.

However, a few axioms have never changed:

- Back up your valuable data, no matter where it is located.

- Ensure that both the backup and restore operations are simple and sufficiently fast for your business needs.

- Be paranoid and periodically store an additional backup somewhere else.

- You can never perform enough backups.

- Never assume some other organization is backing up your valuable data. It is your data, and you, not anyone else, are responsible for it.

Start backing up your data with CloudAlly’s pioneering and best-of-breed SaaS backup.